Data Lakes: Reconsider How You Store (un)Structured Data

By: Ciopages Staff Writer

Updated on: Feb 25, 2023

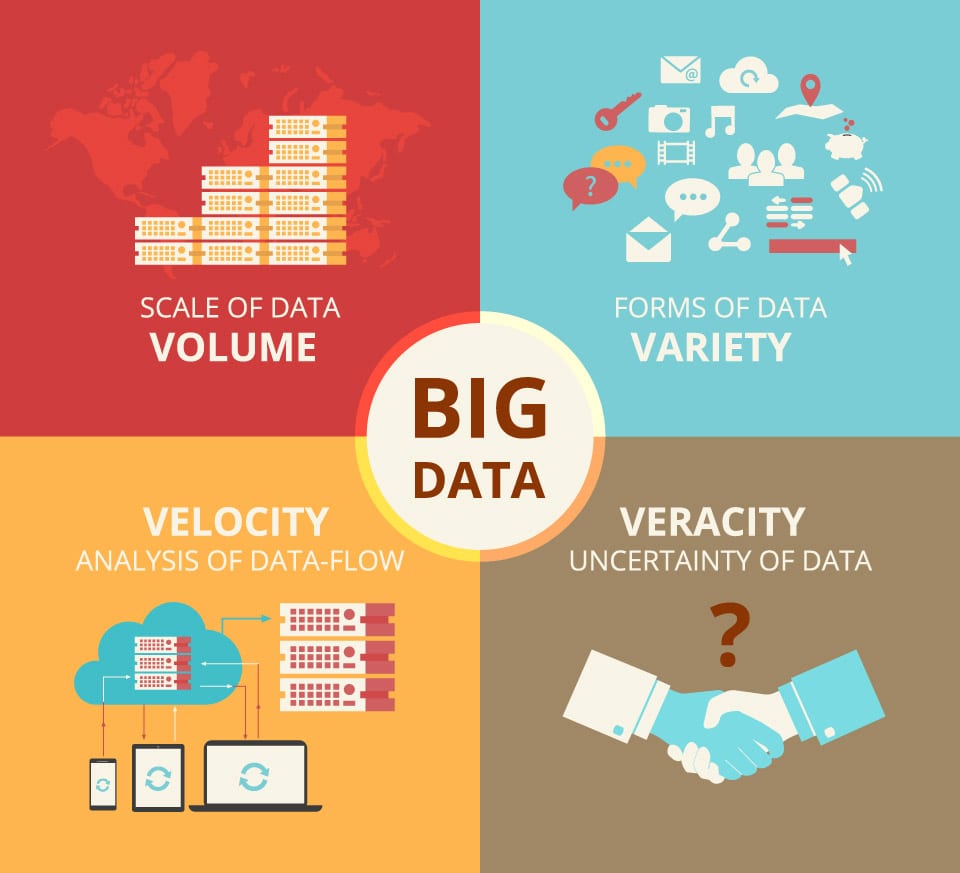

Data lakes are a relatively new way to store both structured and unstructured data. Companies have been talking about “Big Data” for at least 10 years, so why hasn’t anyone nailed the ideal way to capture, organize, retrieve, visualize and analyze data? The 3 V’s of Big Data (high volume, high velocity and high variety) make for a challenge that businesses all over the world face as the influx of information grows. Even structured stores such as data warehouses are groaning under the effort of wrangling micro-transactions, such as a website visit from a specific IP address, and attaching meaning to each data point is increasingly important. User experience is a critical differentiator for all businesses, and the emphasis on crafting a seamless digital experience is spilling over into augmented reality at a rate that data analysts may find frightening.

As marketers see the potential behind Big Data and the front-end tools catch up to the data-gathering potential, analysts are increasingly asked to find and extract nuggets of content from within a whirlwind of information. Details that would have been nearly impossible to track only a few years ago are now tied together with ease, such as a single individual’s customer journey across multiple platforms: web, in-store, phone and mobile. Stitching this information together requires complicated analytical tools and a constantly morphing system whose structure is flexible enough to accept new data sets yet fixed enough to be queried logically.

What is this magical data structure? Some information gurus argue that the data warehouse that we all know and love is a thing of the past, and that data lakes are the new paradigm that allows marketers and business analysts access to the information that they crave. If you’re not quite up to speed on data lakes, here’s a primer that may have you reconsidering how you store your data.

Data Lakes Defined

Simply put, data lakes are a way to store different types of uncategorized data in a massive repository “as is” for later retrieval as part of an extended business intelligence and analysis ecosystem–essentially, a big staging area for data that is waiting to be accessed.

Speeding up the delivery of insights and information to business users is something that fascinates technology leaders throughout the world. The intricacy of modern data and the entanglement required with myriad systems can equate to a series of ever-higher hurdles for users to jump before they have quick and direct access to valuable and usable data.

Core Functionality of Data Lakes

As essentially unstructured data storage utilities, data lakes do offer some core functionality benefits over data warehouses. The flexibility of the system offers users on-demand access to critical data, and the ability to choose which tools they use to access that data. Adding information to a data lake is much less time-consuming than ensuring that data is accurately structured and processed before being added to a data warehouse. Data lakes can provide a more “true” vision of the unprocessed data–users can trust that the data is original and has not had transformations applied that could change its innate value. Finally, data lakes provide easier access to data for the purposes of analysis and data modeling. Machine learning becomes easier as users are able to aggregate data from a variety of internal, external, secure and open sources in one trusted location.

Data Lake or Data Warehouse?

Unlike a data warehouse, which uses a strict hierarchical system to store highly structured data, a data lake holds raw data in its native format in a flat architecture. Perhaps the best way to define the difference is that data stored in a data lake has no requirements or schema associated with it until the data is queried (schema-on-read), whereas in a typical data warehouse the structure is defined as a rigid set of tables before the data is added or imported (schema-on-write).

“Big data and measurement is starting to gain traction. Big data has long been a technology discussion, but I’m starting to see more and more inquiries about how firms are successfully integrating big data sources into their core consumer measurement techniques.” Tina Moffett – Forrester

This is an important difference, mainly because it means that you need to know what type of data you will need and have available before you build or add on to a data warehouse, whereas a data lake can grow more organically. Some believe that data lakes muddy the waters and limit the usefulness of data, while others feel that data lakes have an enormous untapped potential that individuals familiar with traditional BI tools and data warehouses cannot fathom.
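The schema-on-write versus schema-on-read distinction described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the event records and field names (`customer`, `channel`, `amount`, `device`, `store_id`) are invented, an in-memory SQLite table stands in for a warehouse, and a plain list of raw JSON strings stands in for a lake.

```python
import json
import sqlite3

# Hypothetical raw events; every field name here is invented for illustration.
raw_events = [
    '{"customer": "a1", "channel": "web", "amount": 12.5}',
    '{"customer": "b2", "channel": "mobile", "device": "ios"}',
    '{"customer": "a1", "channel": "in-store", "amount": 30.0, "store_id": 7}',
]

# Schema-on-write: the table structure is fixed before any data arrives.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE sales ("
    "customer TEXT NOT NULL, channel TEXT NOT NULL, amount REAL NOT NULL)"
)

rejected = []
for line in raw_events:
    record = json.loads(line)
    try:
        # Fields outside the schema (device, store_id) are dropped at load time.
        warehouse.execute(
            "INSERT INTO sales (customer, channel, amount) VALUES (?, ?, ?)",
            (record.get("customer"), record.get("channel"), record.get("amount")),
        )
    except sqlite3.IntegrityError:
        # A record that is missing a required column never reaches the table.
        rejected.append(record)

warehouse_rows = warehouse.execute(
    "SELECT customer, channel, amount FROM sales ORDER BY amount"
).fetchall()

# Schema-on-read: keep every record as-is and decide on fields at query time.
lake = list(raw_events)


def read_with_schema(lines, fields):
    """Parse raw lines and project only the fields this query asks for."""
    for line in lines:
        record = json.loads(line)
        yield {field: record.get(field) for field in fields}


# A question nobody planned for when the data was stored: which devices appear?
devices = [
    row["device"]
    for row in read_with_schema(lake, ["device"])
    if row["device"] is not None
]

print("warehouse rows:", warehouse_rows)
print("rejected at load time:", rejected)
print("devices found in the lake:", devices)
```

In this sketch the warehouse rejects the record without an `amount` and silently loses `device` and `store_id`, while the lake keeps all three records and leaves interpretation to the reader of the data. The flip side is that every query against the lake has to cope with missing or inconsistent fields itself.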

The Hype and Reality of Data Lakes

While business stakeholders and technology leaders are still scratching their heads over whether data lakes are the right structure (lack of structure?), there can be no denying the need for Big Data. The reality is that business professionals are clamoring for fast access to mission-critical information, and that data structures are changing in an ever-increasing cycle. Data lakes may be a cost-effective and agile way to collect structured and unstructured data and have it available when and if it is needed. Data gathering has moved beyond transactional and relational data, and data lakes seem to provide organizations with a way to aggregate semi-structured and unstructured data including videos, audio, content from social media, clickstream and more.

Data lakes are sometimes marketed as a panacea. However, as with any other solution, technology alone is never the answer.

“Getting value out of the data remains the responsibility of the business end user. Of course, technology could be applied or added to the lake to do this, but without at least some semblance of information governance, the lake will end up being a collection of disconnected data pools or information silos all in one place.” – Andrew White, Gartner

Foundational Features of Data Lakes

Original data lakes were founded on Apache Hadoop, the open-source, Java-based framework that allows large data sets to be stored and processed in a distributed computing environment. There are two key parts to a traditional Hadoop implementation: a distributed file system for data storage (HDFS) and a default framework for data processing (MapReduce). Tools in the Hadoop ecosystem support both SQL-style queries (Apache Hive, for example) and NoSQL access (Apache HBase, for example) to pull data for analysis. While not the only option, Hadoop remains a widely used system for storing and managing large amounts of data.
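To make the MapReduce processing model mentioned above more concrete, here is a minimal single-process sketch of its three stages (map, shuffle, reduce) applied to a word count. It does not use Hadoop or any Hadoop API; the function names and the two sample text blocks are assumptions made for illustration, and a real cluster would run the map and reduce steps in parallel across many machines.

```python
from collections import defaultdict


def map_phase(document):
    """Map step: emit a (word, 1) pair for every word in one block of text."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle step: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce step: collapse the values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    """Run map, shuffle and reduce in sequence inside one process."""
    mapped = (pair for doc in documents for pair in map_phase(doc))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


# Hypothetical blocks of text, standing in for file blocks spread across nodes.
blocks = ["data lake data", "lake of raw data"]
counts = word_count(blocks)
print(counts)
```

The point of the pattern is that each map call only needs its own block and each reduce call only needs the values for one key, which is what lets the work be spread over a distributed file system.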

Project Considerations

The considerations before taking on a data lake project are myriad: ingestion workflows must be configured, and metadata management and integration with existing environments are critical to ensuring the ongoing usability of the data. Before you embark upon a data lake project, it’s important to work with experts who can help architect the environment, define user interfaces, explore data intake opportunities and develop a proof of concept before full launch. Determine whether your organizational structure, rules and governance will be supported by the operating model. Understanding how the data is gathered and aggregated, and offering a user-friendly way for analysts and marketers to retrieve it, are both crucial for project success.
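As a rough illustration of why ingestion and metadata management go together, the sketch below stores each incoming payload unchanged and appends a small catalog entry describing it. Everything here is an assumption made for the example: the `raw/<source>/<name>` directory layout, the `catalog.jsonl` file, the metadata fields and the function names are hypothetical, and a temporary local directory stands in for real lake storage.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def ingest(lake_root, source, name, payload):
    """Store payload bytes unchanged and append a catalog entry describing them."""
    target = Path(lake_root) / "raw" / source / name
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(payload)

    # Hypothetical metadata fields; a real catalog would follow governance rules.
    entry = {
        "path": str(target.relative_to(lake_root)),
        "source": source,
        "size_bytes": len(payload),
        "sha256": hashlib.sha256(payload).hexdigest(),
        "ingested_at": datetime.now(timezone.utc).isoformat(),
    }
    catalog = Path(lake_root) / "catalog.jsonl"
    with catalog.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry) + "\n")
    return entry


def find_by_source(lake_root, source):
    """Look up catalog entries for one source without opening the raw files."""
    catalog = Path(lake_root) / "catalog.jsonl"
    with catalog.open(encoding="utf-8") as handle:
        entries = [json.loads(line) for line in handle]
    return [entry for entry in entries if entry["source"] == source]


# A temporary directory stands in for the lake; the file names are invented.
with tempfile.TemporaryDirectory() as lake_root:
    ingest(lake_root, "clickstream", "2023-02-25.json", b'{"page": "/home"}')
    ingest(lake_root, "social", "post-1.txt", b"hello lake")
    clickstream_entries = find_by_source(lake_root, "clickstream")

print(clickstream_entries)
```

Without some record like this, raw files accumulate with no way to tell what they are or where they came from, which is the situation the governance warning earlier in the article describes.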

Moving from a highly structured environment such as a data warehouse to an unstructured pool of information such as a data lake is a tremendous leap for an organization. However, it does offer users the opportunity to gain quick access to a massive range of data at a lower storage cost and with improved overall system performance. While data lakes may not be the right structure for all organizations, it will be interesting to watch how smart and savvy technology professionals leverage this relatively new data structure to define a new era of data interaction.

Are you using data lakes? Do you think they are a new paradigm? Or old wine in a new bottle?